We don't subscribe to all the hype or hysteria around AI, but we're not foolish enough to ignore the traffic it can send our way. If you've been living under a rock and haven't searched for anything in the last two years, you might still be doing basic SEO. AI search isn't coming. It's already here, and the numbers aren't subtle. AI-driven search traffic is reportedly up 527%, and we have seen this firsthand. The reaction so far has been predictable: experiments, prompt hacks, GEO checklists, and a lot of trial and error trying to figure out how to show up in AI answers.

What's been frustrating to watch is that while the discovery layer has changed, the way content is created and distributed hasn't. Teams are still treating CMSs like blog editors, still doing manual research, formatting, and publishing. For us, this has pushed a different kind of rethink. If AI is becoming a core distribution channel, then structure, format, and automation matter just as much as the content itself. So instead of chasing ranking tricks, we've been focused on building systems that make content easier for AI to understand, extract, and surface, and on rolling those practices into how we ship work for every client.

Your content is being read before it's being visited

You've probably already seen this in your numbers. Traffic isn't just coming from Google search anymore. It's coming through Google AI Overviews, ChatGPT, Perplexity, Gemini, Claude, Copilot... all of them reading, summarizing, and deciding what to surface before a human even clicks a link. Which means your content now has a new primary audience. Before, the flow was simple. Write something, publish it, wait for it to rank, hope people land on it.

Now it looks more like this. Write or generate something, structure it properly, make sure it's easy to extract, let AI engines ingest it, and then maybe a human shows up later because an answer cited you. This is where things get weird.

Most sites are still built for humans first and crawlers second. Heavy HTML, random layouts, scripts everywhere, and inconsistent structure might look great to you, but they read terribly to an LLM trying to understand what the page is actually about. AI doesn't care about your animations. It doesn't care about your gradients. It cares about clean structure, predictable formatting, and content it can parse without fighting your frontend.

So if your content is hard to extract, you're not just losing SEO. You're making it harder for AI systems to understand you, quote you, or recommend you at all. And right now, that's where a growing chunk of discovery is happening.

Becoming an "AI-native" agency

This is one of those phrases that can get cringe very quickly, so let's keep it practical. When we say we're pushing to be an "AI-native" agency, we don't mean writing everything with AI or turning every blog into a prompt experiment. It just means accepting that AI is now part of how content is created, discovered, read, and redistributed. For years, content was built for humans first, search engines second. Now the first reader is often a model, and the human comes later if you get cited. That flips the priorities. Structure matters more. Consistency matters more. And the amount of manual busywork teams still do starts to look a bit outdated.

So instead of thinking "How do we rank this?", we've started thinking "How easily can this be understood, extracted, and distributed?" That's the shift. How we're actually implementing that comes next.

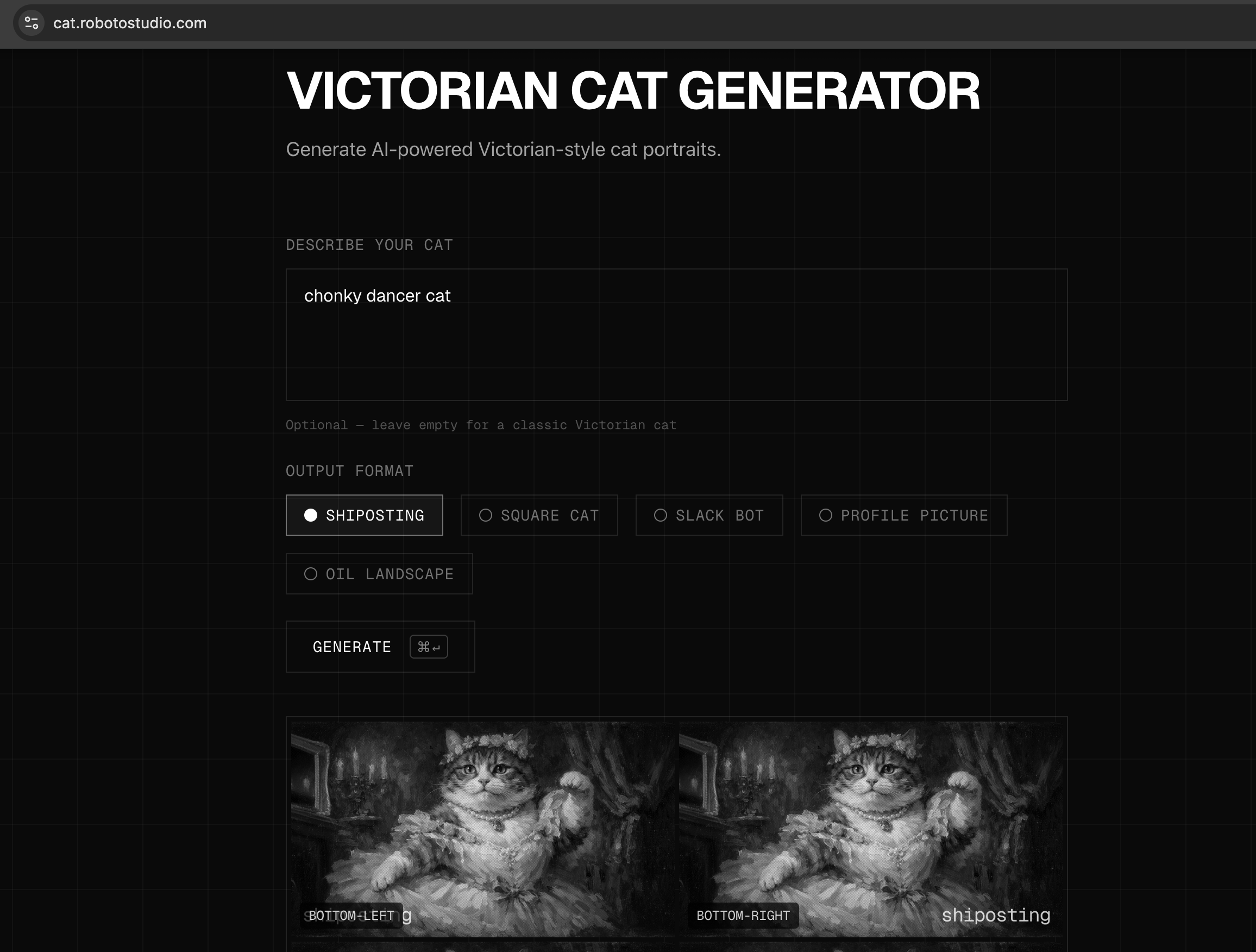

With this same ideology, we also built our own image generation tool. Not the "prompt it once and hope for the best" kind. Not the slop you see flooding LinkedIn with six-fingered executives shaking hands in front of imaginary dashboards. Ours is structured, customizable, and built so teams can define style, constraints, and output rules properly. More broadly, we're not interested in shouting "AI, AI" like it's a feature in itself. We're interested in building systems that remove repetitive work.

If something takes too long, we don't schedule a meeting about it. We automate it. This is not just for clients but for ourselves too. Because if we wouldn't use the workflow internally, we're certainly not shipping it to anyone else. We also build AI agents using Vercel workflows to turn form submissions into fully enriched, qualified leads.


How are we building our content system?

Enough theory, now let's get to the practical.

Dual routes

This is the part we've started baking into every build by default. Every piece of content now ships in two formats:

- Human version: /blog/post-name

- AI-friendly version: /blog/post-name.md

The normal route is your typical web page. The .md route strips out the layout, scripts, and styling, leaving clean, structured content. Headings stay intact. Tables stay readable. Lists, links, and sections make sense without needing to interpret the UI. Which means AI engines can fetch a version that's far easier to parse, summarize, and cite.
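To make the idea concrete, here is a minimal sketch of how one piece of structured content could back both routes. It is not our production code: the `Post` and `Block` types and the `resolveRoute` and `toMarkdown` helpers are hypothetical names invented for this example, and it assumes posts are already stored as structured blocks.

```typescript
// Hypothetical content model: an assumption for this sketch, not a CMS schema.
type Block =
  | { kind: "heading"; level: 2 | 3; text: string }
  | { kind: "paragraph"; text: string }
  | { kind: "list"; items: string[] };

interface Post {
  slug: string;
  title: string;
  blocks: Block[];
}

// Decide which variant a request path asks for:
// "/blog/post-name" -> html, "/blog/post-name.md" -> markdown.
function resolveRoute(
  path: string,
): { slug: string; format: "html" | "markdown" } | null {
  const match = /^\/blog\/([a-z0-9-]+)(\.md)?$/.exec(path);
  if (!match) return null;
  const [, slug, ext] = match;
  if (slug === undefined) return null;
  return { slug, format: ext ? "markdown" : "html" };
}

// Render the same structured content as plain Markdown, with no layout or scripts.
function toMarkdown(post: Post): string {
  const lines: string[] = [`# ${post.title}`, ""];
  for (const block of post.blocks) {
    switch (block.kind) {
      case "heading":
        lines.push(`${"#".repeat(block.level)} ${block.text}`, "");
        break;
      case "paragraph":
        lines.push(block.text, "");
        break;
      case "list":
        for (const item of block.items) lines.push(`- ${item}`);
        lines.push("");
        break;
    }
  }
  // Collapse trailing blank lines into a single final newline.
  return lines.join("\n").trimEnd() + "\n";
}
```

The point of the design is that both routes read from the same source, so the Markdown version can never drift from the page humans see.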

We're not doing this as an experiment anymore. It's quietly becoming a baseline for us and across client builds. If models are going to read your content first, you might as well give them something clean to read instead of making them fight your frontend.

llms.txt as an AI discovery layer

This is another thing we've started treating as standard, not experimental. Right at the root of the site, we place a simple file: /llms.txt. It's not fancy. It's not visual. It's just a clean, Markdown-based content map that explains what the site is about and where the important stuff lives. Think of it like a sitemap, but written for models instead of search engines.

Inside it, we highlight things like:

- Key pages worth reading

- Core topics the site focuses on

- Important routes and sections

The purpose is straightforward. It gives LLMs a quick understanding of what your site covers without making them crawl through layers of HTML, navigation, and layout logic. We treat it as a semantic sitemap for AI. Basically, it is just a cleaner way of saying, "Here's what this site is about, and here's where to start."
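As a rough illustration, the file can be generated from a small content map rather than written by hand. The sketch below assumes the commonly proposed llms.txt layout (a title heading, a one-line blockquote summary, then sections of links). The `SiteMap` shape and the `buildLlmsTxt` helper are hypothetical names for this example, and the URLs in the usage note are placeholders.

```typescript
// Hypothetical input shape for this sketch.
interface LlmsLink {
  title: string;
  url: string;
  note?: string; // optional one-line description of the page
}

interface LlmsSection {
  heading: string; // e.g. "Key pages" or "Core topics"
  links: LlmsLink[];
}

interface SiteMap {
  name: string;
  summary: string;
  sections: LlmsSection[];
}

// Build the Markdown body that would be served at /llms.txt.
function buildLlmsTxt(site: SiteMap): string {
  const out: string[] = [`# ${site.name}`, "", `> ${site.summary}`, ""];
  for (const section of site.sections) {
    out.push(`## ${section.heading}`, "");
    for (const link of section.links) {
      const note = link.note ? `: ${link.note}` : "";
      out.push(`- [${link.title}](${link.url})${note}`);
    }
    out.push("");
  }
  return out.join("\n").trimEnd() + "\n";
}
```

Calling `buildLlmsTxt` with a section called "Key pages" and a link such as `{ title: "Blog", url: "https://example.com/blog.md", note: "All posts" }` yields a bullet of the form `- [Blog](https://example.com/blog.md): All posts` under a `## Key pages` heading.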

Don't ignore MCPs

MCP, or Model Context Protocol, showed up quietly in late 2024. Anthropic introduced it, OpenAI and Google followed, and now most serious platforms are building around it. The simplest way to think about it: it's a standard way for AI to talk to your tools. Like USB-C, but for context.

Instead of AI guessing what your content looks like, it can understand your schemas, query your CMS, and stage changes with actual awareness of how your data is structured. Sanity ships an MCP server with dozens of tools. Contentful has one. WordPress.com announced support. The pattern is obvious: CMSs are becoming systems AI can work inside, not just pull text from.

And we're already using this in real work.

Renaming Sanity Create

We recently renamed "Sanity Create" to "Sanity Canvas" across a large content set. Normally that means running a global find-and-replace, missing a few instances, breaking a few references, and someone spotting a leftover label weeks later. This time, it was one conversation.

We just used Claude Code and MCP. We described the change in plain English. Claude, connected to the Sanity MCP server, understood the schema, located every instance across documents, and staged edits as a proper content release. You are allowed to call this our party trick.

What actually happened:

- AI found every reference across structured content

- Proposed changes instead of pushing them live

- Grouped everything into a reviewable release

- Zero manual hunting through hundreds of entries
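The "propose, don't push" pattern in that list is worth sketching on its own. The example below is not the Sanity MCP server or its API. It is a plain TypeScript illustration, with a hypothetical `proposeRename` helper and an assumed nested-JSON document shape, of walking structured content, collecting every string that contains the old name, and returning a reviewable list of changes without mutating anything.

```typescript
// Assumed document shape: arbitrary nested JSON.
type Json = string | number | boolean | null | Json[] | { [key: string]: Json };

interface ProposedChange {
  documentId: string;
  path: string; // e.g. "body[0].text"
  before: string;
  after: string;
}

// Collect proposed edits; the input documents are never modified.
function proposeRename(
  docs: { id: string; content: Json }[],
  from: string,
  to: string,
): ProposedChange[] {
  const changes: ProposedChange[] = [];

  const walk = (documentId: string, value: Json, path: string): void => {
    if (typeof value === "string") {
      if (value.includes(from)) {
        // split/join replaces every occurrence inside the string.
        changes.push({ documentId, path, before: value, after: value.split(from).join(to) });
      }
    } else if (Array.isArray(value)) {
      value.forEach((item, i) => walk(documentId, item, `${path}[${i}]`));
    } else if (value !== null && typeof value === "object") {
      for (const [key, child] of Object.entries(value)) {
        walk(documentId, child, path ? `${path}.${key}` : key);
      }
    }
  };

  for (const doc of docs) walk(doc.id, doc.content, "");
  return changes;
}
```

Each `ProposedChange` carries the document, the field path, and a before/after pair, which is roughly the information a reviewer needs to approve a release.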

Migrating the stuff nobody wants to touch

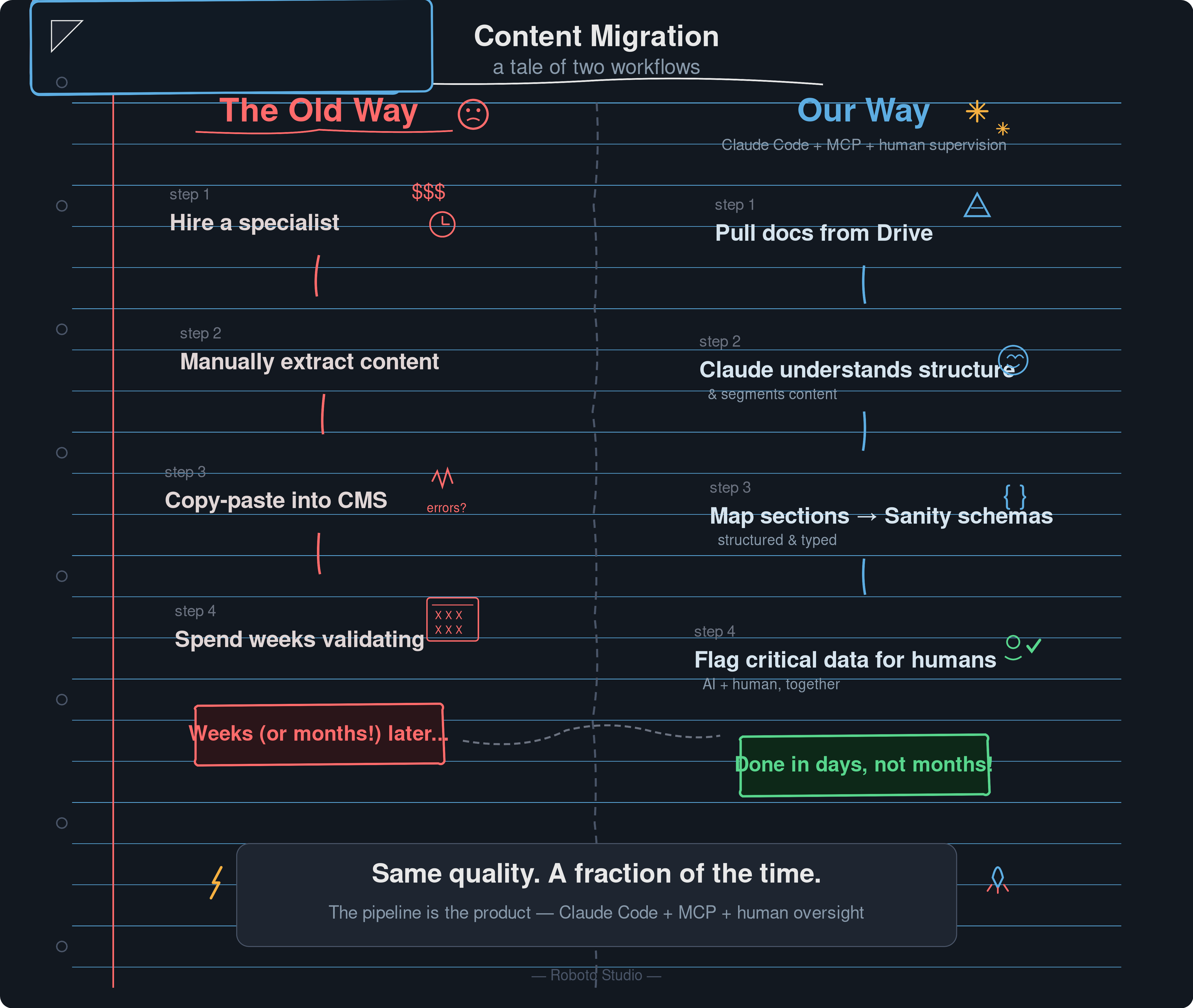

We also used MCP-driven workflows while migrating ~80 pharma documents from Google Drive into structured content. These were technical documents: clinical data buried mid-sentence, tables, references, and compliance-sensitive numbers. The AI handled extraction, restructuring, and mapping content into proper models. We handled verification. The pipeline pulled documents from Drive, then Claude worked out the structure, segmented the content, mapped sections into Sanity schemas, and flagged critical data for human verification.

The important part: this wasn't fully automated, and for pharma it shouldn't be. Every critical number was flagged. Every sensitive data point was cross-checked. What would've taken months of careful manual work became a few days of supervised automation with a clean audit trail. The output was better structured than a pure manual migration.
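The flagging step is the easiest part to illustrate. The sketch below is a simplified stand-in, not the pipeline we ran. It assumes content has already been segmented into strings, and it uses a hypothetical `flagForReview` helper with an assumed pattern (a number, optionally followed by a percent sign or a small set of units) to mark any segment containing a numeric value for human verification.

```typescript
interface Segment {
  text: string;
  needsReview: boolean;
  flaggedValues: string[];
}

// Assumed pattern: a number, optionally followed by % or a unit.
// A real rule set would be broader and domain-specific.
const NUMERIC_VALUE = /\d+(?:\.\d+)?(?:\s?(?:%|mg|mL|kg))?/g;

// Mark every segment containing a numeric value so a human checks it.
function flagForReview(segments: string[]): Segment[] {
  return segments.map((text) => {
    const flaggedValues: string[] = text.match(NUMERIC_VALUE) ?? [];
    return { text, needsReview: flaggedValues.length > 0, flaggedValues };
  });
}
```

The rule deliberately over-flags. In a compliance-sensitive migration, a reviewer dismissing a harmless number costs far less than a wrong dose slipping through.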

Moving to content generation

We've talked about infra, pipelines, MCPs, dual routes, and all the fun stuff that makes developers nod aggressively. Now this bit is for the marketers.

If you're not generating content for your team, you can skip this. If you are, this is where most teams quietly waste 60% of their time and pretend it's "strategy." We don't "write content" from scratch anymore. We build systems that generate it properly.

1. Research

We use AI to do structured research before we write anything. Instead of manually reading 12 articles and pretending we absorbed all of them, we have Claude generate a markdown brief with:

- Source links

- Key arguments

- Contradictions

- Gaps in coverage

We are not asking it to write the blog. We are asking it to read the internet so we don't have to. For technical topics, this is gold. The AI consumes the dense documentation and release notes. We decide what actually matters. It does the digestion. We do the thinking. Division of labour. Very civilised.

2. Image generation

We generate images early in the workflow. Why?

Because waiting three days for a hero concept while everyone "aligns on direction" is not a system. It's theatre. We generate visual directions in seconds. Then a designer refines or replaces them if they need to go live. AI removes the friction, and designers keep the taste. If you're threatened by that, we've seen your Figma files. You'll be fine.

3. SEO field automation

We hate wasting time on repetitive work like filling in every field on a blog post. We know meta titles, descriptions, alt text, TL;DRs, and FAQs are important, but that doesn't make writing them enjoyable. So we automate them. We use Sanity AI Assist directly inside the CMS.

That means:

- Structured content already lives in Sanity

- AI Assist understands the schema

- It generates fields in context, not in isolation

We use it to generate:

- Intent-aligned meta titles

- Clean, non-cringe descriptions

- Proper alt text (not "image-final-v3.png")

- TL;DR summaries

- FAQ blocks aligned to actual query patterns

This isn't "spray AI over content and hope it ranks." It's using structured content plus schema-aware AI to fill the fields everyone forgets. And because it lives inside Sanity, everything is reviewable, nothing auto-publishes, and editors stay in control. Because "we'll add that later" is the most successful content killer of the last decade.

4. Cron jobs that actually earn their keep

Someone publishes an article at 6:42 p.m. on a Friday. They spell "Next.js" three different ways. Nobody notices. Six months later, that article ranks. Now the inconsistency is permanent. Multiply that by 200 posts. That's how sloppiness scales.

No one deliberately writes inconsistent content. It just happens. There are different authors, different moods, and different levels of caffeine. Over time, terminology drifts. Brand names mutate. Hyphens appear and disappear like they're optional. And nobody is volunteering to manually audit everything. So we stopped pretending we would.

We built a scheduled system that scans all published content weekly. It checks for naming inconsistencies, spelling drift, and terminology chaos using a mix of rules and a lot of vibes. It generates a report. We review it and fix what matters. This is non-negotiable, especially when AI models are crawling your site and building an understanding of your brand from patterns. If those patterns are messy, that's on you.
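The rules half of that scan is simple enough to sketch. This is an illustration rather than our actual job: the `TermRule` shape, the `RULES` list, and the `scanTerminology` helper are hypothetical, and the pattern for "Next.js" is just one guess at how the name tends to get mangled. A scheduler would call something like this weekly and turn the findings into a report.

```typescript
interface TermRule {
  canonical: string; // the spelling we want, e.g. "Next.js"
  variants: RegExp;  // must use the "g" flag so every occurrence is returned
}

interface Finding {
  postId: string;
  found: string;
  expected: string;
}

// Example rule set; the pattern is an assumption for this sketch.
const RULES: TermRule[] = [
  { canonical: "Next.js", variants: /\bnext\s?\.?\s?js\b/gi },
];

// Report every spelling that matches a rule but differs from the canonical form.
function scanTerminology(
  posts: { id: string; body: string }[],
  rules: TermRule[],
): Finding[] {
  const findings: Finding[] = [];
  for (const post of posts) {
    for (const rule of rules) {
      const matches: string[] = post.body.match(rule.variants) ?? [];
      for (const found of matches) {
        if (found !== rule.canonical) {
          findings.push({ postId: post.id, found, expected: rule.canonical });
        }
      }
    }
  }
  return findings;
}
```

The function only reports. Deciding what to fix stays with a human, which matches the review step described above.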

5. Freshness monitoring

There's a special kind of confidence you get from an article that ranked well in 2023. It's the dangerous kind. You assume it's still accurate and relevant. After all, it hasn't broken. Traffic is steady. Meanwhile:

- The framework shipped two new versions.

- The stat you quoted has been revised.

- The "official documentation" link now points to a polite 404.

And your article is still there. Calm. Slightly wrong. That's how credibility erodes. No one is manually auditing every post every few months. If you say you are, you either have an intern army or too much free time. So we automated it. We run a scheduled freshness scan across all published content. It checks for outdated version references, ageing statistics, and broken links, and it tells us exactly what changed so we can fix it properly.
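Two of those checks can be sketched without touching the network. The example below is a simplified illustration, not our scanner: `scanFreshness` is a hypothetical helper, the `latestMajor` map of current versions is something you would supply yourself, and treating any four-digit year older than `maxAgeYears` as "ageing" is a crude assumption. Broken-link checking is left out because it needs real HTTP requests.

```typescript
interface FreshnessIssue {
  postId: string;
  kind: "outdated-version" | "ageing-year";
  detail: string;
}

function scanFreshness(
  posts: { id: string; body: string }[],
  latestMajor: Record<string, number>, // e.g. { "Next.js": 15 }, supplied by you
  currentYear: number,
  maxAgeYears = 2,
): FreshnessIssue[] {
  const issues: FreshnessIssue[] = [];
  for (const post of posts) {
    // Check 1: "<name> <major>" mentions older than the known latest major.
    for (const [name, latest] of Object.entries(latestMajor)) {
      const escaped = name.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
      const pattern = new RegExp(`${escaped}\\s+v?(\\d+)`, "g");
      let m: RegExpExecArray | null;
      while ((m = pattern.exec(post.body)) !== null) {
        const mentioned = Number(m[1]);
        if (mentioned < latest) {
          issues.push({
            postId: post.id,
            kind: "outdated-version",
            detail: `${name} ${mentioned} (latest assumed: ${latest})`,
          });
        }
      }
    }
    // Check 2: four-digit years older than the allowed age.
    const years: string[] = post.body.match(/\b(?:19|20)\d{2}\b/g) ?? [];
    for (const year of years) {
      if (currentYear - Number(year) > maxAgeYears) {
        issues.push({ postId: post.id, kind: "ageing-year", detail: year });
      }
    }
  }
  return issues;
}
```

Passing `currentYear` in explicitly, rather than reading the clock inside the function, keeps the scan reproducible when you rerun it against an old report.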

6. Traffic analysis

Finally, most teams analyse traffic in one tool and manage content in another. Then decisions happen in Slack based on whatever feels true that week. We wired PostHog's MCP directly into Sanity so we can ask simple questions, like which Q4 posts are declining or which high-traffic articles don't convert, and get answers tied to actual CMS documents, not anonymous URLs. It's still slightly scrappy under the hood, but it's already saving us hours.
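Underneath, the useful part is a join between traffic rows and CMS documents. The sketch below shows that join in isolation and makes no claims about PostHog's or Sanity's actual APIs. The `TrafficRow` and `CmsDoc` shapes, the `/blog/<slug>` path convention, and the `decliningPosts` helper are all assumptions for illustration.

```typescript
interface TrafficRow {
  path: string;   // e.g. "/blog/post-name"
  period: string; // e.g. "Q3" or "Q4"
  views: number;
}

interface CmsDoc {
  id: string;
  title: string;
  slug: string;
}

// Return documents whose views fell between two periods, biggest drop first.
function decliningPosts(
  docs: CmsDoc[],
  rows: TrafficRow[],
  previous: string,
  current: string,
): { doc: CmsDoc; previousViews: number; currentViews: number }[] {
  // Sum views per path and period.
  const views = new Map<string, number>();
  for (const row of rows) {
    const key = `${row.path}|${row.period}`;
    views.set(key, (views.get(key) ?? 0) + row.views);
  }
  return docs
    .map((doc) => ({
      doc,
      previousViews: views.get(`/blog/${doc.slug}|${previous}`) ?? 0,
      currentViews: views.get(`/blog/${doc.slug}|${current}`) ?? 0,
    }))
    .filter((r) => r.currentViews < r.previousViews)
    .sort(
      (a, b) =>
        (a.currentViews - a.previousViews) - (b.currentViews - b.previousViews),
    );
}
```

Because the result carries the full document rather than a bare URL, whoever reads it can jump straight to the entry that needs attention.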

The economics are real

On paper, AI content looks like a steal. Around $131 per post compared to roughly $611 for human-written. That's a 4.7x difference. Deloitte says marketers save 3 hours per piece of content. CoSchedule's 2025 survey found 84% of respondents reported faster delivery.

But the other side of the ledger is less tidy. MIT research shows a "J-curve" where AI adoption initially decreases productivity before improving it. The recovery typically takes 4+ years for established companies. A Danish study of 25,000 workers found "virtually no impact" on wages or employment two years into the ChatGPT era. And while AI users reportedly complete 21% more tasks, review time increases 91%. The bottleneck just moves. On top of that, 70-85% of AI initiatives fail to meet expected outcomes. That's not pessimism, that's MIT and RAND Corporation data.

So yes, the economics are real. But only if you build workflows that capture the efficiency gains without creating new bottlenecks. Most teams don't. If you want AI systems that actually reduce cost without creating new chaos, get in touch. We'll help you set it up properly and not just sprinkle AI on top and call it innovation.

Get in touch

Book a meeting with us to discuss how we can help or fill out a form to get in touch